I caught an exception. Now what?

I can’t draw to save my life, but I love comics, especially ones that capture the essence of what it’s like to be a software developer. They capture the shared pain we all go through and temper it with humor. Luckily, I no longer work for large corporations, so it’s easier now to read Dilbert and laugh without also wincing.

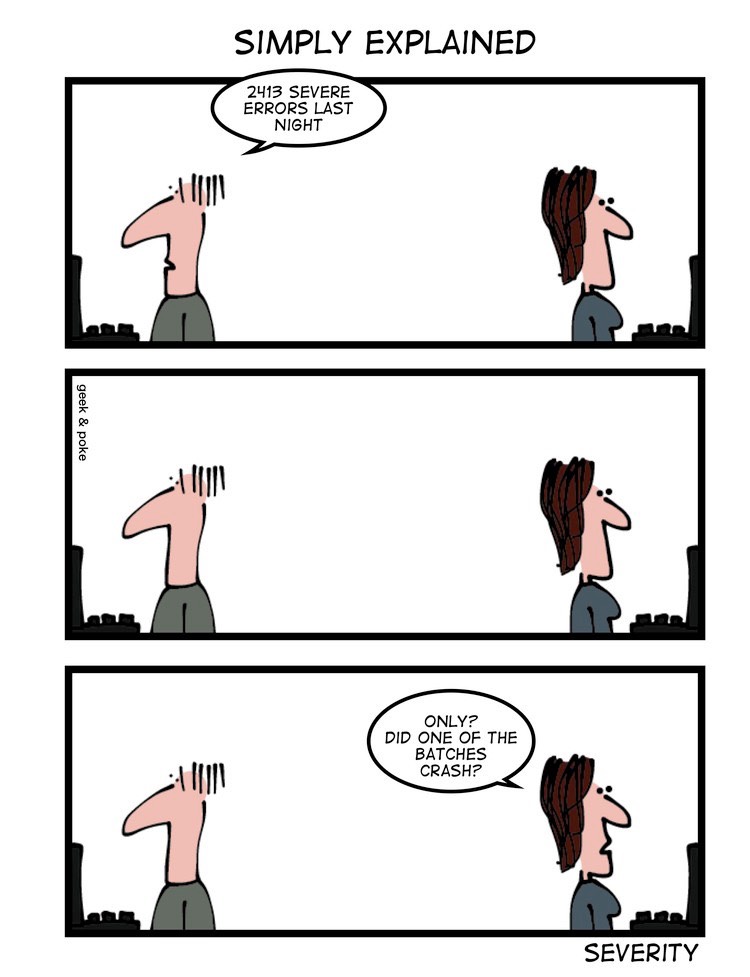

But this comic from Geek&Poke hit me hard. The pain is just too fresh.

This spoke to me, drenching me in waves of nostalgia. Of course, nostalgia is the wrong word. It indicates a longing and wistfulness that I do not feel in any way. Instead, it was the stark regret of a life of sorting through hundreds of errors, the result of a “Log Everything” exception handling strategy. It is not a happy memory.

And of course, all those errors I mentioned were severe.

All errors are not created equal…

Why is it that all exceptions are treated the same? They all seem to be logged as severe. An exception means the code failed; the exception was not handled, and the code was unable to continue. The notion of a “minor error” does seem questionable. And so, every error is black or white. The code either works or it doesn’t. There is no room for shades of gray. Every exception is severe.

Every organization has its own unwritten rules on how to take care of these supposedly severe errors. The developer has the best of intentions: a desire to know when errors occur. However, it results in the logging of every single exception thrown, and most times those exceptions end up ignored. Not at first, of course. But after the first couple of investigations, a sense of “The Boy Who Cried Wolf” sets in and the errors go unheeded. That is, until something goes horribly wrong.

Exceptions, by their very nature, are supposed to be exceptional. There are normal actions that occur through everyday use of your application, and then there are exceptions which must be reported.

And so, as software developers, we log and report the exceptions. They usually fall into one of two buckets: warnings or errors. Part of the fault lies with generally-used logging libraries that don’t give us much ability to differentiate any further. Sometimes the developer writing code randomly picks one of the two buckets, and even then the difference seldom holds any true meaning.

But this method describes an exception’s severity, which is the wrong approach. Instead, we should be concentrating on a different axis entirely — one that requires us to think about our exceptions in a completely different way.

A different approach to exception handling

Let’s think about exceptions in different terms. Suppose we run a piece of code and an exception occurs. What would happen if we just ran that code again right after it failed? We’d see that a few categories start to emerge.

Transient exceptions

Let’s consider a common scenario. You have some code that updates a record in the database. Two threads attempt to update the row at the same time, resulting in an optimistic concurrency exception. A DbUpdateConcurrencyException is thrown on the losing thread:

System.Data.Entity.Infrastructure.DbUpdateConcurrencyException was unhandled by user code HResult=-2146233087 Message=Store update, insert, or delete statement affected an unexpected number of rows (0).

This is an example of a transient exception. Transient exceptions appear to be caused by random quantum fluctuations in the ether. If the failing code is immediately retried, it will probably succeed.

Semi-transient exceptions

The next category involves failures such as connecting to a web service that is temporarily down. An immediate retry will likely not succeed, but retrying after waiting a bit (from a few seconds up to a few minutes) might.

These are semi-transient exceptions. Semi-transient exceptions are persistent for a limited time but are still resolved relatively quickly.

Another common example involves the failover of a database cluster. If a database has enough pending transactions, it can take a minute or two for all of those transactions to commit before the failover can complete. During this time, queries are executed without issue, but attempting to modify data will result in an exception.

It can be difficult to deal with this type of failure, as it’s frequently not possible for the calling thread to wait around long enough for the failure to resolve.

Systemic exceptions

Outright flaws in your system cause systemic exceptions, which are straight-up bugs. They will fail every time given the same input data. These are our good friends NullReferenceException, ArgumentException, dividing by zero, and a host of other idiotic mistakes we’ve all made.

Besides the usual mistakes in logic, you may have encountered versioning exceptions when deserializing objects. These exceptions occur when you serialize one version of an object, then attempt to deserialize it into another version of the object. Like the other exceptions mentioned above, you can rerun your code as often as you like and it’ll just keep failing.

In short, these are the exceptions that a developer needs to look at, triage, and fix, preferably without all the noise from transient and semi-transient exceptions getting in the way of the investigation.

Exception management, the classic way

Many developers, at one point or another, will create a global utility library and write code similar to the following:

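    // Naive retry helper: runs the action up to `tries` times in total.
    public static void Retry(Action action, int tries)
    {
        int attempt = 0;
        while (true)
        {
            try
            {
                action();
                return; // Success: stop retrying.
            }
            catch (Exception)
            {
                attempt++;
                if (attempt >= tries)
                    throw; // Out of attempts: rethrow, preserving the stack trace.
            }
        }
    }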

While this might work in some transient situations, it becomes messy when you throw transactions into the mix. For example, this code could be wrapped within a transaction and the action itself may start its own transaction. Maintaining the proper TransactionScope can be a challenge.

In any case, some exceptions will escape this construct and will be thrown, making their way into our log files or error reporting systems. When that happens, what will become of the data that was being processed? If we were trying to contact our payment gateway to charge a credit card for an order, we would have lost that information and probably the revenue that went with it, too. As the exception bubbled up from the original code, we lost the parameters to the method we originally called.

At that point, we have no choice but to display an error screen to our user and ask them to try again. And how much faith would you put in a payment screen that said, “Something went wrong, please try again”? (In fact, most payment screens explicitly tell you not to do this and actually take steps to prevent it.)

Furthermore, to avoid data loss, you’d need to log not only the exception but also every single argument and state variable involved in the request. Unfortunately, hindsight is always 20/20 on what information should have been logged.

So let’s take the next step and make that logged data more explicit—modeling the combination of method name and parameters as a kind of data transfer object (DTO) or a message.
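As a minimal sketch of what that could look like, take the payment example from above. A call such as a hypothetical `ChargeCreditCard(orderId, amount, currency)` method becomes a small class whose name stands in for the method name and whose properties stand in for the parameters. The class and property names are assumptions made up for illustration, not part of any particular library.

```csharp
using System;

// Hypothetical message: the former method name becomes the type name,
// and the former method parameters become read-only properties.
public sealed class ChargeCreditCard
{
    public Guid OrderId { get; }
    public decimal Amount { get; }
    public string Currency { get; }

    public ChargeCreditCard(Guid orderId, decimal amount, string currency)
    {
        OrderId = orderId;
        Amount = amount;
        Currency = currency ?? throw new ArgumentNullException(nameof(currency));
    }
}
```

Because the whole request now lives in one object, it can be stored, inspected, or handed to the same code again later, instead of evaporating when an exception unwinds the call stack.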

Switching to a message-based architecture

With a “conventional” application composed of many functions or methods all running within one process, we exchange data between modules by passing values into functions, then wait for a return value. This expectation of an immediately available return value is limiting, as nothing can proceed until that return value is available.

What if we structured our applications differently so that communicating between modules didn’t have to return a value and we didn’t have to wait for a response before proceeding? For example, instead of calling a web service directly from our client code and waiting for it to respond, we could bundle up the data needed for the web service into a message and forward it to a process that calls the web service in isolation. The code that sends the message doesn’t necessarily need to wait for the web service to finish; it just needs to be notified when the web service call is done.

Once we start sending messages instead of calling methods, we can build infrastructure to handle exceptions differently. In the process, we’ll gain all sorts of amazing superpowers. If there’s a failure, we can retry the message if necessary since nobody is waiting on an immediate return value. This retry ability is powerful and forms the basis for how we can build better handling mechanisms for all our exceptions.
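To make the difference from a method call concrete, here is a toy sketch of the sending side. It assumes an in-process queue built on `ConcurrentQueue<T>` as a stand-in for a real message queue; `LookUpShippingRate` and `InMemoryQueue<T>` are hypothetical names invented for this example.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical message carrying everything the web service call needs.
public sealed class LookUpShippingRate
{
    public string PostalCode { get; }
    public LookUpShippingRate(string postalCode) => PostalCode = postalCode;
}

// Toy stand-in for a real queue. Send returns as soon as the message is
// stored; a separate worker receives it and calls the web service later.
public sealed class InMemoryQueue<T>
{
    private readonly ConcurrentQueue<T> _messages = new ConcurrentQueue<T>();

    public int Count => _messages.Count;

    public void Send(T message) => _messages.Enqueue(message);

    public bool TryReceive(out T message) => _messages.TryDequeue(out message);
}
```

The sender never sees a return value from the web service, so nothing upstream is blocked if the worker has to process the same message more than once.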

Handling transient exceptions

Consider this architecture: there’s a message object which contains all of the business data required for an operation and a different object which contains code to handle the message—a “message handler.” Since we wouldn’t want to have to remember to include error-handling logic in each and every message handler, it makes sense to have some generic infrastructure code wrap the invocation of our message handler code with the necessary exception handling and retry functionality.

With this architecture in place, we can apply a retry strategy to our messages as they come in. If the message handler succeeds without throwing an exception, we will be done. However, if an exception is thrown, our retry mechanism will immediately re-execute the message handler code. If we get another exception when the code is re-executed, we can try again and continue retrying until either the code succeeds or we reach some pre-determined cap on the number of retries.

Because transient failures are unlikely to persist for very long, this kind of infrastructure support for immediate retries would automatically take care of these types of exceptions.
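The wrapping described above might look something like the following sketch. It is not the API of any particular messaging framework; `ImmediateRetries.Process` and its parameters are assumptions chosen for illustration, and the handler is modeled as a plain `Action<TMessage>`.

```csharp
using System;

public static class ImmediateRetries
{
    // Invokes the handler and, if it throws, immediately runs it again,
    // up to maxAttempts attempts in total. Returns the number of attempts
    // used. If every attempt fails, the last exception propagates.
    public static int Process<TMessage>(
        TMessage message, Action<TMessage> handler, int maxAttempts)
    {
        if (maxAttempts < 1)
            throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        for (int attempt = 1; ; attempt++)
        {
            try
            {
                handler(message);
                return attempt;
            }
            catch (Exception) when (attempt < maxAttempts)
            {
                // Attempts remain: loop and retry. The message is still
                // in hand, so no business data has been lost.
            }
        }
    }
}
```

The handler itself contains no retry logic. The infrastructure owns the loop, and it can re-run the handler safely because the message holds all of the input.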

Handling semi-transient exceptions

Recall the earlier example of integrating with a third-party web service that is prone to occasional outages. Immediately retrying the code that calls it three or five or even 100 times isn’t likely to help. For semi-transient exceptions like this, we should add a second retry strategy that would reprocess the message after some predetermined delay.

It’s possible to have several rounds of retries with this strategy and we can increase the delay each time. For example, after the first failure, we could set the message handler to retry after 10 seconds. If that fails, we can retry after 20 seconds, then 40 seconds, and so on until we hit our predetermined maximum. (Many networks use this kind of exponential backoff to retransmit blocks of data in the event of network congestion.)
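The delay schedule itself is simple to compute. Here is a small sketch, assuming a doubling backoff and a fixed number of rounds; `DelayedRetryPolicy.Delays` is a hypothetical helper, not part of any library.

```csharp
using System;
using System.Collections.Generic;

public static class DelayedRetryPolicy
{
    // Yields the wait before each delayed retry round: the initial delay
    // first, then double the previous delay for every following round.
    public static IEnumerable<TimeSpan> Delays(TimeSpan initialDelay, int maxRounds)
    {
        TimeSpan delay = initialDelay;
        for (int round = 0; round < maxRounds; round++)
        {
            yield return delay;
            delay = TimeSpan.FromTicks(delay.Ticks * 2);
        }
    }
}
```

Calling `DelayedRetryPolicy.Delays(TimeSpan.FromSeconds(10), 3)` yields waits of 10, 20, and 40 seconds, matching the example above. A real system would schedule the message for redelivery after each wait rather than blocking a thread for that long.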

With this strategy in place, we can be confident that almost all semi-transient errors will be resolved automatically, at which point the message will be processed successfully. If a message repeatedly fails, even after both sets of retries, we can be fairly sure that we are now dealing with a systemic exception.

Handling systemic exceptions

If we have a systemic exception, our retry logic may never break free, preventing a thread from doing useful work and effectively “poisoning” the queue unless the message is removed by some other means. For this reason, messages which cause systemic exceptions are called poison messages.

Our message-processing infrastructure must move these poison messages to the side, into some other queue so that regular processing can continue. At this point, we are reasonably confident that the exception is an actual error that needs some investigation.

It’s likely that someone would need to take a closer look at these poison messages to figure out what went wrong in the system before deciding what to do with them. Perhaps there was a simple coding error, and we can deploy a new version that will fix it. After the fix is deployed, because we still have our business data in a message, we can still retry it by returning the message to its original queue. Furthermore, we didn’t lose any business data even though there was a bug in the system!

In an environment with multiple queues serving regular message processing logic, it would make sense to create a single centralized error queue to which all poison messages could be moved. That way, we would only need to look at a single queue to know that everything’s working properly or how many system errors we need to deal with.

As an added benefit of moving poison messages to an error queue, the developer doesn’t have to hunt through log files to get the complete exception details. Instead, we can include the stack trace along with the message data. Then the developer will have both the business data that led to the failure as well as the details of the failure itself—something not usually captured with standard logging techniques.
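Putting those pieces together, the sketch below shows one way the move to an error queue could work. It assumes a plain in-memory `Queue<T>` as the error queue, and the `FailedMessage<TMessage>` envelope and `ErrorQueueForwarder` class are hypothetical names invented for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical envelope stored in the error queue: the original message,
// the queue it came from, and the full exception details.
public sealed class FailedMessage<TMessage>
{
    public TMessage Message { get; }
    public string SourceQueue { get; }
    public string ExceptionDetails { get; }

    public FailedMessage(TMessage message, string sourceQueue, Exception exception)
    {
        Message = message;
        SourceQueue = sourceQueue;
        // ToString() includes the exception type, message, and stack trace.
        ExceptionDetails = exception.ToString();
    }
}

public static class ErrorQueueForwarder
{
    // Runs the handler up to maxAttempts times. If the final attempt fails,
    // the message is parked in the error queue instead of being lost.
    // Returns true if the handler eventually succeeded.
    public static bool ProcessOrMoveToErrorQueue<TMessage>(
        TMessage message, Action<TMessage> handler, int maxAttempts,
        string sourceQueue, Queue<FailedMessage<TMessage>> errorQueue)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                handler(message);
                return true;
            }
            catch (Exception ex) when (attempt >= maxAttempts)
            {
                errorQueue.Enqueue(
                    new FailedMessage<TMessage>(message, sourceQueue, ex));
                return false;
            }
            catch (Exception)
            {
                // Attempts remain: fall through and try again.
            }
        }
    }
}
```

Because the envelope records the source queue, returning a message for reprocessing after a fix is deployed only requires taking it off the error queue and sending `Message` back to `SourceQueue`.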

Summary

Getting 2413 severe exceptions overnight on a regular basis is a huge organizational failure, in addition to a technical one. It’s bad enough that, due to the cognitive load of all these exceptions, they will probably get forgotten in an archive, unlikely ever to get fixed, relegated to a DBA to clean out periodically. What’s worse is all of the countless developer hours, an organization’s most precious resource, wasted in logging all these exceptions that can never be addressed practically.

Three things are needed to deal with these exceptions in a better way. First, we need to analyze exceptions based on how likely they are to recur when the same code is retried, not on severity. Second, we need to employ an architecture based on asynchronous messaging so that we have the ability to replay messages as many times as necessary to achieve success. And finally, we need messaging infrastructure that takes into account the differences between transient, semi-transient, and systemic exceptions.

Once we do this, the transient and semi-transient exceptions that seem to “just happen” through no real fault of our own tend to fade away. Deadlocks, temporarily unavailable web services, finicky FTP servers, SQL failover events, unreliable VPN connections—the vast majority of these issues just resolve themselves through the retries, leaving us to focus on fixing the exceptions that actually mean something.

Additionally, we can use this pattern to add value to our system and our organization. Processes that fail in a message handler don’t have to result in an error message displayed to a customer. This relieves pressure on first-level support, as many exceptions happen behind the scenes without the customer’s knowledge, leading to a drastic reduction in support calls.

So if you’ve ever had to scan through thousands of “severe” exceptions looking for the real problem, you may want to take a look at message-based architectures (starting with our tutorial on message replay mechanics) to ease the burden of error reporting, freeing you up for more important things…

Like reading comics.
