MSMQ performance improvements in NServiceBus 6.0

This post is part of a series describing the improvements in NServiceBus 6.0.

MSMQ was the very first NServiceBus message transport, and while not overly flashy, it got the job done. You could almost call MSMQ a finished product because, while it’s updated with each Windows release, it doesn’t really change much. It’s solid, reliable, dependable, and overall, it Just Works™.

One of the biggest changes we made in version 6.0 of NServiceBus (V6) is that the framework is now fully async.¹ The thing is, the MSMQ API in the .NET Framework hasn’t been updated to support async/await, so what could we do for the MSMQ transport in NServiceBus?

Make it go faster anyway. That’s what.

The switch from reserved threads operating synchronously but in parallel to truly async tasks has created an opportunity to squeeze every last drop of performance out of the MSMQ transport.

V5: Threads

In previous versions of NServiceBus, we used multiple threads to process messages in parallel. In NServiceBus V5, you would configure parallel processing settings using the TransportConfig element in an App.config file like this:

<TransportConfig MaximumConcurrencyLevel="10" />

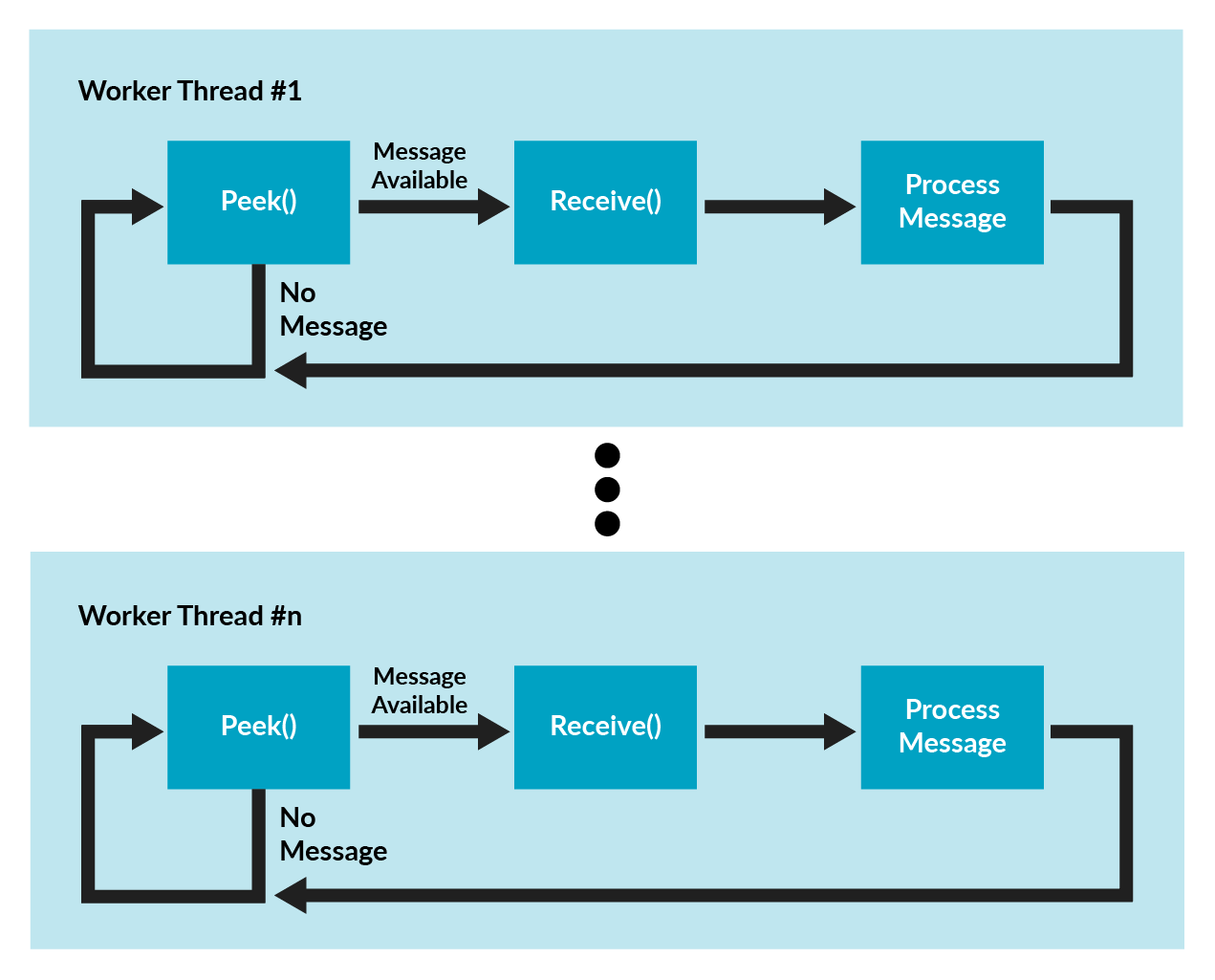

This would prepare 10 dedicated threads within the endpoint. Each thread would host its own message-processing pipeline that would operate relatively independently of the others. These independent NServiceBus threads would call the MessageQueue.Peek() method to check if there were any messages to be processed.

Each V5 processing thread is an independent message processor. As a result, each thread does its own peeking and then processes the message it discovers.

This pattern works well, but it doesn’t go as far as we’d like. Within each thread, all processing is synchronous, so any IO-bound tasks are destined to slow things down. All of these synchronous blocking calls in the message-processing pipeline also block the thread that’s doing the peek, and that prevents the thread from going back to the queue to check for additional messages—or doing anything else for that matter. Each thread is stuck in a silo, unable to do anything to help out its neighbors.
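To make the silo effect concrete, here is a minimal sketch of the fixed-thread model. It is not NServiceBus code: an in-memory ConcurrentQueue stands in for the MSMQ queue, and the names FixedThreadSketch, Run, and Handle are hypothetical. Unlike a real endpoint, the threads here exit once the queue is empty rather than continuing to peek.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;

public static class FixedThreadSketch
{
    // Hypothetical stand-in for a synchronous, IO-bound message handler.
    static void Handle(int message)
    {
        Thread.Sleep(50);
    }

    // Starts threadCount dedicated threads. Each thread takes one message and
    // processes it to completion before it looks at the queue again.
    // Returns the number of messages processed.
    public static int Run(IEnumerable<int> messages, int threadCount)
    {
        // Assumption: an in-memory queue replaces the real MSMQ queue.
        var queue = new ConcurrentQueue<int>(messages);
        var threads = new List<Thread>();
        int processed = 0;

        for (int i = 0; i < threadCount; i++)
        {
            var thread = new Thread(() =>
            {
                int message;
                while (queue.TryDequeue(out message))
                {
                    // The thread is blocked for the whole call, so it cannot
                    // return to the queue or help any other thread meanwhile.
                    Handle(message);
                    Interlocked.Increment(ref processed);
                }
            });
            thread.Start();
            threads.Add(thread);
        }

        // Wait for every worker thread to drain its share of the queue.
        foreach (var thread in threads)
        {
            thread.Join();
        }
        return processed;
    }
}
```

With threadCount set to 10, at most 10 messages are ever being handled, and every one of those threads sits idle for the full duration of each blocking call.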

Peek() also throws an exception when it is called with a timeout and no message arrives before the timeout expires, and while exceptions are great for exceptional circumstances, message queues empty out all the time. This particular exception, thrown whenever the queue stays empty, is also very annoying to a developer when Visual Studio is configured to break on all exceptions.

V6: Tasks

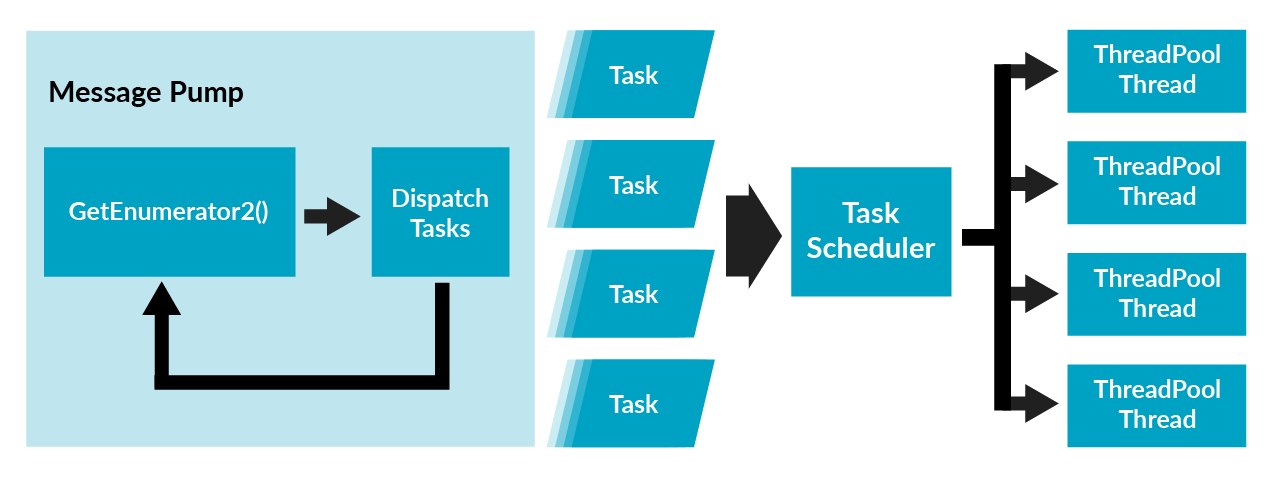

In V6, we’ve made things work a little differently. Instead of fixed threads, we use tasks throughout the framework, and the .NET TaskScheduler ensures that all tasks are executed in the most efficient way possible.

This also means that it’s easier for NServiceBus to manage parallel processing to maximum effect. The message pump now fetches waiting messages using the MessageQueue.GetMessageEnumerator2() method. For each message found, a new Task is created, and the .NET TaskScheduler determines the most efficient way to manage the overall workload, rather than relying upon a fixed number of processing threads.

In V5, the thread peeking the message queue becomes repeatedly blocked by all of the synchronous work that has to happen afterwards in order to fully process the message before peeking again. With all of the processing work represented as tasks in V6, the peeking thread is free to go right back to the queue to obtain more work. This ensures that the processing pipeline is always full of work, which in turn results in the best possible throughput.

Although there’s no fixed thread count like in V5, you’ll still be able to control the number of concurrently processed messages. We do that by using a semaphore² to limit how many messages are dispatched via tasks to be processed, which controls the number of messages that can be “in flight” at any given time.
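The idea of a semaphore-limited pump can be sketched in a few lines. This is an illustration under stated assumptions, not the actual NServiceBus message pump: a plain sequence of integers stands in for the MSMQ queue, and the names TaskPumpSketch, RunAsync, and HandleAsync are hypothetical. The method returns the highest number of messages that were in flight at once, so the effect of the limit can be observed.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

public static class TaskPumpSketch
{
    // Hypothetical stand-in for an async, IO-bound message handler.
    static Task HandleAsync(int message)
    {
        return Task.Delay(50);
    }

    // Dispatches every message as a task, with at most maxConcurrency
    // messages in flight. Returns the peak in-flight count observed.
    public static async Task<int> RunAsync(IEnumerable<int> messages, int maxConcurrency)
    {
        var limiter = new SemaphoreSlim(maxConcurrency);
        var gate = new object();
        var running = new List<Task>();
        int inFlight = 0;
        int peak = 0;

        foreach (var message in messages)
        {
            // Wait for a free slot, then dispatch and go straight back
            // to the "queue" without waiting for the handler to finish.
            await limiter.WaitAsync().ConfigureAwait(false);

            running.Add(Task.Run(async () =>
            {
                lock (gate)
                {
                    inFlight++;
                    if (inFlight > peak) peak = inFlight;
                }
                try
                {
                    await HandleAsync(message).ConfigureAwait(false);
                }
                finally
                {
                    lock (gate) { inFlight--; }
                    // Free the slot so the pump can dispatch another message.
                    limiter.Release();
                }
            }));
        }

        await Task.WhenAll(running).ConfigureAwait(false);
        return peak;
    }
}
```

Awaiting TaskPumpSketch.RunAsync(messages, 10) never yields a peak above 10, yet no thread is reserved for any particular message: while a handler awaits IO, its thread returns to the pool and can run other work.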

While we default to max(2, NumberOfLogicalProcessors) concurrent messages, you can configure the value to whatever you like:

endpointConfiguration.LimitMessageProcessingConcurrencyTo(10);

This feature allows you to control simultaneous connections to an overloaded SQL server or rate-limited web service. In these situations, you may even want to limit an endpoint to a single concurrent message. Of course, you can also use it to control the amount of CPU and memory consumed by an endpoint, which is important when those resources are limited.

At first blush, controlling the number of in-flight messages in V6 might sound similar enough to controlling the number of fixed threads in V5. Given that, you might assume that the performance characteristics between versions would be roughly the same as well, but the results are pretty surprising.

Results

In informal performance tests with no-op message handlers, a V6 endpoint with its Task-based message pump achieved about twice the throughput, measured in messages per second, of its V5 counterpart.

We think this is a pretty significant speed boost, and it wouldn’t have been possible without the async API.

Of course, it’s important to note that most message handlers will not be no-ops but will instead be full of IO-bound code for interacting with databases, calling web services, and sending other messages, so the difference in your own code’s performance will vary. However, what is clear is that the more you are able to take advantage of async APIs within your own code, the better the throughput you’ll get.

Summary

Although Microsoft provides no async API for MSMQ, we were able to achieve considerable performance improvements by using tasks instead of fixed threads to minimize wasted downtime during IO-bound operations.

More importantly, the performance improvements in the MSMQ transport illustrate an important lesson: switching to an asynchronous API can result in better application performance, even when some parts of the application are still synchronous. The bottom line is that the best time to adopt async/await is right now.

If you’re unsure how to get started, take a look at our async/await webinar series. When you make the jump to async/await, we think you’ll see the benefits too.

Footnotes

1 Async/Await: It’s time!

2 Semaphore (programming) - Wikipedia

About the author: David Boike is a developer at Particular Software who likes things that are fast, like cheetahs, the Millennium Falcon, and the NServiceBus message pipeline.
