How we achieved 5X faster pipeline execution by removing closure allocations

The NServiceBus messaging pipeline strives to achieve the right balance of flexibility, maintainability, and wicked fast…ummm…ability. It needs to be wicked fast because it is executed at scale. For our purposes, “at scale” means that throughout the lifetime of an NServiceBus endpoint, the message pipeline will be executed hundreds, even thousands of times per second under high load scenarios.

Previously, we achieved 10X faster pipeline execution and a 94% reduction in Gen 0 garbage creation by building expression trees at startup and then dynamically compiling them. One of the key lessons from those expression tree adventures is that reducing Gen 0 allocations makes a big difference: the fewer Gen 0 allocations we make, the more speed we can squeeze out of the message handling pipeline, which ultimately means more speed for our users.

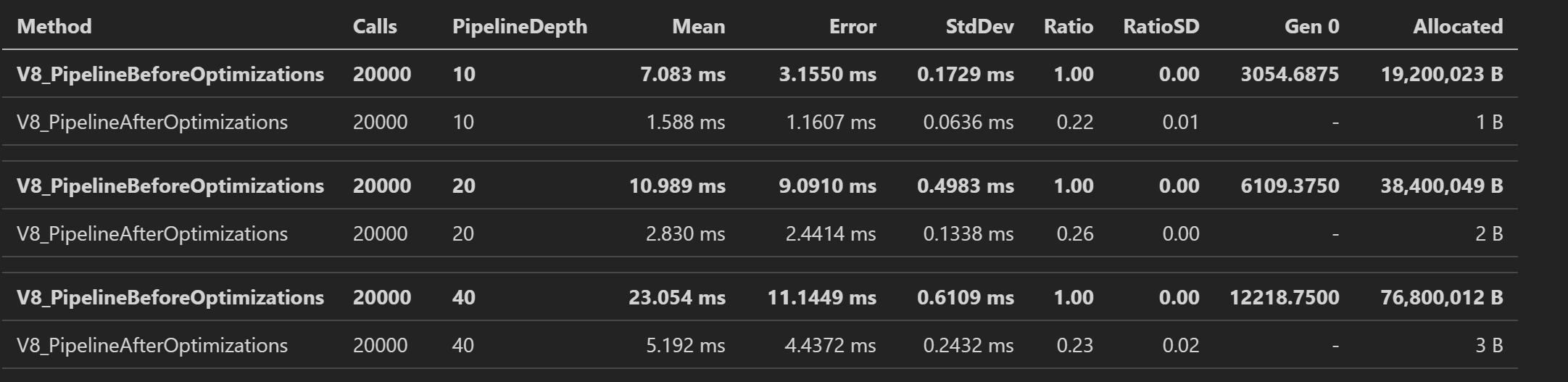

A common but often overlooked source of allocations is closures. Originally, our message pipeline had, to use the scientific term, a gazillion of them. By getting rid of the major source of closure allocations in the pipeline, we’ve managed to get another five-fold increase in pipeline execution performance, as well as the complete removal of closure-related Gen 0 garbage creation. In this post, we’ll recap what closures look like, explain how they can be avoided, and show the tricks we’ve applied to the NServiceBus pipeline to get rid of them.

Closures lurking beneath the surface

Closure allocations can be hard to spot. Before the Heap Allocation Viewer for JetBrains Rider and the Clr Heap Allocation Analyzer for Visual Studio came along, you had to either decompile the code or attach a memory profiler and watch out for allocations of types such as *__DisplayClass*, Action*, or Func*.

Closures can occur anywhere a lambda (for example, one converted to an Action or Func delegate) accesses state that exists outside the lambda. Here’s an example:

```csharp
static void MyFunction(Action action) => action();

int myNumber = 42;
MyFunction(() => Console.WriteLine(myNumber));
```

Here is the decompiled code for this snippet:

```csharp
<>c__DisplayClass0_0 <>c__DisplayClass0_ = new <>c__DisplayClass0_0();
<>c__DisplayClass0_.myNumber = 42;
<Main>g__MyFunction|0_0(new Action(<>c__DisplayClass0_.<Main>b__1));
```

In the generated code, we can spot two allocations. The first, of type <>c__DisplayClass0_0, is the state class that “hosts” the number. The second is an Action that points to the compiler-generated method <Main>b__1 on that display class instance, which eventually executes our Console.WriteLine.
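If you want to see the display class without a decompiler, a delegate’s Target property exposes it at run time. The following C# sketch uses a hypothetical CreateCapturingAction helper. The generated type name is a Roslyn implementation detail rather than something the C# specification guarantees, so treat the printed name as indicative only.

```csharp
using System;

// Hypothetical factory: the lambda captures the parameter `number`, so each call
// allocates a fresh display class instance to host it plus a new Action bound to it.
static Action CreateCapturingAction(int number) => () => Console.WriteLine(number);

Action first = CreateCapturingAction(42);
Action second = CreateCapturingAction(42);

// Delegate.Target is the instance the delegate calls into; here it is the
// compiler-generated display class (a name along the lines of <>c__DisplayClass0_0).
Console.WriteLine(first.Target?.GetType().Name);

// Two calls, two delegates, two display class instances.
Console.WriteLine(ReferenceEquals(first, second));               // False
Console.WriteLine(ReferenceEquals(first.Target, second.Target)); // False
```

Every call pays for both objects, which is the same pair of allocations visible in the decompiled code.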

All that just to print a number to the console. And keep in mind, closure allocations aren’t limited to your own code; they also appear when you pass capturing lambdas to .NET APIs such as ConcurrentDictionary.GetOrAdd.
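As a sketch of the GetOrAdd case, assuming .NET Core 2.0 or later (where the state-based GetOrAdd<TArg> overload exists) and C# 9 for the static lambda, here is a capturing call next to its closure-free alternative. The keys and the prefix variable are made up for illustration.

```csharp
using System;
using System.Collections.Concurrent;

var cache = new ConcurrentDictionary<string, string>();
string prefix = "handler-";

// Captures `prefix`: the compiler hoists it into a display class and creates a
// new Func delegate each time this line runs, even if the key is already cached.
string orders = cache.GetOrAdd("orders", key => prefix + key);

// State-based overload GetOrAdd<TArg>(key, valueFactory, factoryArgument):
// `prefix` is passed in as the argument, so the static lambda captures nothing
// and the compiler can cache the delegate.
string payments = cache.GetOrAdd("payments", static (key, p) => p + key, prefix);

Console.WriteLine(orders);   // handler-orders
Console.WriteLine(payments); // handler-payments
```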

Bye bye closures

It’s possible to get rid of the closure and thus remove the extra display class and delegate allocations. Since C# 9, which shipped with .NET 5, a lambda can be marked as static. A static lambda may only access static members, constants, and its own parameters and locals; if it tries to capture anything else, the compiler reports an error.

```csharp
int myNumber = 42;
MyFunction(static () => Console.WriteLine(myNumber));
```

```
error CS8820: A static anonymous function cannot contain a reference to 'myNumber'.
```

In this simple example, where the state is just a fixed number, declaring the variable as a constant gets rid of the CS8820 compiler error, because the compiler substitutes the constant’s value instead of capturing a variable.

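```csharp
const int myNumber = 42;
MyFunction(static () => Console.WriteLine(myNumber));
```

The decompiled output no longer contains a display class; the delegate is created once and cached in a static field:

```csharp
<Main>g__MyFunction|0_0(<>c.<>9__0_1 ?? (<>c.<>9__0_1 = new Action(<>c.<>9.<Main>b__0_1)));
```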
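The <>c.<>9__0_1 ?? (...) pattern in the decompiled call is a lazily initialized static cache. A small C# sketch to confirm it, using a hypothetical CreateStaticAction factory: two calls hand back the very same delegate instance. This reflects how the current Roslyn compiler emits non-capturing lambdas, not a language-level guarantee.

```csharp
using System;

const int myNumber = 42;

// Hypothetical factory returning a static lambda. Nothing is captured (the constant
// is substituted by the compiler), so Roslyn creates the delegate once, stores it
// in a static field, and returns that same instance on every later call.
static Action CreateStaticAction() => static () => Console.WriteLine(myNumber);

Action first = CreateStaticAction();
Action second = CreateStaticAction();

Console.WriteLine(ReferenceEquals(first, second)); // True
first(); // prints 42
```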

Yet in reality, code is rarely that simple. What if the number comes from actual user input or is generated with Random.Next()? When the value isn’t a compile-time constant, the state must be passed into the lambda somehow. Changing the delegate type from Action to Action<object> lets the lambda accept a state object that MyFunction passes into the delegate.

```csharp
static void MyFunction(Action<object> action, object state) => action(state);

int myNumber = 42;
MyFunction(static state => Console.WriteLine((int)state), myNumber);
```

Decompiled, along with the relevant IL instruction at the call site:

```csharp
<Main>g__MyFunction|0_0(<>c.<>9__0_1 ?? (<>c.<>9__0_1 = new Action<object>(<>c.<>9.<Main>b__0_1)), num);
```

```
IL_0023: box [System.Runtime]System.Int32
```

This gets rid of the display class and the delegate allocation, but, unfortunately, passing the integer as object forces the compiler to emit a box instruction: the integer is copied into a heap-allocated object on every call, which is an allocation we don’t need. Whenever possible, state-based overloads should use generics instead.

```csharp
static void MyFunction<T>(Action<T> action, T state) => action(state);

int myNumber = 42;
MyFunction(static number => Console.WriteLine(number), myNumber);
```

The call site now passes the integer straight through as an Action<int> argument, with no box:

```csharp
<Main>g__MyFunction|0_0(<>c.<>9__0_1 ?? (<>c.<>9__0_1 = new Action<int>(<>c.<>9.<Main>b__0_1)), state);
```
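To watch the box from the IL earlier turn into real heap allocations, the C# sketch below measures both variants with GC.GetAllocatedBytesForCurrentThread. WithObject, WithGeneric, and AllocatedBytes are hypothetical helpers, the iteration count is arbitrary, and exact byte counts depend on the runtime version and JIT optimizations, so compare the two numbers rather than relying on specific values.

```csharp
using System;

// Hypothetical helpers mirroring MyFunction: one passes state as object, one as T.
static void WithObject(Action<object> action, object state) => action(state);
static void WithGeneric<T>(Action<T> action, T state) => action(state);

// Runs `body` once to warm up (JIT compilation, lazily cached delegates), then
// returns how many bytes the current thread allocated during a second run.
static long AllocatedBytes<T>(Action<T> body, T state)
{
    body(state);
    long before = GC.GetAllocatedBytesForCurrentThread();
    body(state);
    return GC.GetAllocatedBytesForCurrentThread() - before;
}

const int Iterations = 10_000;
int myNumber = new Random().Next();

long objectBytes = AllocatedBytes(static n =>
{
    for (int i = 0; i < Iterations; i++)
        WithObject(static state => _ = (int)state, n); // boxes n on every call
}, myNumber);

long genericBytes = AllocatedBytes(static n =>
{
    for (int i = 0; i < Iterations; i++)
        WithGeneric(static number => _ = number, n); // T is int: no boxing
}, myNumber);

Console.WriteLine($"object state:  {objectBytes} bytes");
Console.WriteLine($"generic state: {genericBytes} bytes");
```

Expect the object-based number to scale with the iteration count and the generic one to stay at or near zero, although newer JIT versions may be able to optimize some boxes away.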

By passing all the state a delegate needs in as arguments, and by marking lambdas as static (C# 9) so that the compiler enforces it, we no longer allocate unintentionally.
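When a lambda needs more than one value, the generic approach still works: bundle the values into a ValueTuple and pass that as the state. Apply is a hypothetical Func-returning variant of MyFunction, used here so the result can be inspected.

```csharp
using System;

// Hypothetical Func-based variant of the generic MyFunction helper: it hands the
// state to the delegate and returns whatever the delegate produces.
static TResult Apply<T, TResult>(Func<T, TResult> func, T state) => func(state);

int myNumber = 42;
string label = "answer";

// Both values travel together as a named ValueTuple. ValueTuple is a struct, so
// passing it as T neither boxes it nor requires a display class.
string message = Apply(static s => $"{s.Label}: {s.Number}", (Label: label, Number: myNumber));

Console.WriteLine(message); // answer: 42
```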

Let’s see how we can apply this to the NServiceBus pipeline.

The state captured in the pipeline

At its core, the NServiceBus pipeline is just a series of delegates of the shape Task Invoke(TInContext context, Func<TOutContext, Task> next) that are chained together. By design, the state required to execute the pipeline is passed into each invocation as TInContext and handed on to the next part of the pipeline as TOutContext. For example, the incoming context captures information about the received message (such as its headers), the child service provider that resolves dependencies scoped to the pipeline execution, and more. Since the context objects carry all the important state, there shouldn’t be any closure allocations. Let’s verify that by looking at a simple pipeline.

```csharp
var behavior1 = new MyBehavior1();
var behavior2 = new MyBehavior2();
var behavior3 = new MyBehavior3();
var context = new RootContext();

await behavior1.Invoke(context, ctx1 =>
    behavior2.Invoke(ctx1, ctx2 =>
        behavior3.Invoke(ctx2, ctx3 => Task.CompletedTask)));
```

The context flows nicely from one behavior to the next. However, each lambda also needs the next behavior instance so that it can call its Invoke method. If we mark these lambdas as static, as shown before, we immediately get the familiar CS8820 compiler error.

```csharp
await behavior1.Invoke(context, static ctx1 =>
    behavior2.Invoke(ctx1, static ctx2 =>
        behavior3.Invoke(ctx2, static ctx3 => Task.CompletedTask)));
```

```
error CS8820: A static anonymous function cannot contain a reference to 'behavior2'.
error CS8820: A static anonymous function cannot contain a reference to 'behavior3'.
```

The compiler errors show that the lambdas capture the behaviors taking part in the pipeline execution as outside state, which causes closure allocations. To solve this, we need a way to make the behaviors for each part of the pipeline reachable through the pipeline’s own state.
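The following self-contained C# sketch makes this closure observable. MyBehavior1 to MyBehavior3 and RootContext reuse the names from the snippet, but the Behavior base class, its ReceivedNext list, and the single context type shared by every stage are hypothetical simplifications, not the NServiceBus API. Running the pipeline twice shows that behavior1 receives a brand-new next delegate each time.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

var behavior1 = new MyBehavior1();
var behavior2 = new MyBehavior2();
var behavior3 = new MyBehavior3();

// Run the same pipeline twice, as an endpoint would for two incoming messages.
for (int run = 0; run < 2; run++)
{
    var context = new RootContext();

    // These lambdas read behavior2 and behavior3 from the enclosing scope, so the
    // compiler hoists both locals into a display class and creates new delegates
    // bound to it every time this expression is evaluated.
    await behavior1.Invoke(context, ctx1 =>
        behavior2.Invoke(ctx1, ctx2 =>
            behavior3.Invoke(ctx2, ctx3 => Task.CompletedTask)));
}

bool sameNext = ReferenceEquals(behavior1.ReceivedNext[0], behavior1.ReceivedNext[1]);
Console.WriteLine(sameNext); // False: a new capturing delegate per run

// Simplified stand-ins, not the NServiceBus types.
class RootContext { }

abstract class Behavior
{
    // Hypothetical diagnostics hook: remembers every `next` delegate received.
    public List<Func<RootContext, Task>> ReceivedNext { get; } = new();

    public Task Invoke(RootContext context, Func<RootContext, Task> next)
    {
        ReceivedNext.Add(next);
        return next(context);
    }
}

sealed class MyBehavior1 : Behavior { }
sealed class MyBehavior2 : Behavior { }
sealed class MyBehavior3 : Behavior { }
```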

Make the behaviors part of the pipeline state

The idea is simple but powerful. Besides being a series of delegates, the pipeline is also essentially a collection of behaviors. So if we can somehow store that collection of behaviors in the context of the pipeline, we’re all set.

The good thing is that once the pipeline execution plan is built, the order of the behaviors is well-known and never changes. So the collection representing all the behaviors, including their order, can be created once and reused over and over again. Luckily, all behaviors inside the pipeline implement a non-generic marker interface: IBehavior. With that in mind, we can store all behaviors of the pipeline in an array of IBehavior objects in the context.

```csharp
public class BehaviorContext : IBehaviorContext
{
    internal IBehavior[] Behaviors { get; set; }
}

// done once at initialization/startup time
var behavior1 = new MyBehavior1();
var behavior2 = new MyBehavior2();
var behavior3 = new MyBehavior3();
var cachedPipeline = new IBehavior[3] { behavior1, behavior2, behavior3 };

var context = new RootContext();

// assigned every time a new context is built
context.Behaviors = cachedPipeline;
```

Now that all the behaviors can be accessed from the context, the pipeline execution plan builder can build the pipeline invocation plan that will access the behaviors array at a specific index, cast the returned behavior to the right behavior type, and then call Invoke:

```csharp
await behavior1.Invoke(context, static ctx1 =>
    ((MyBehavior2)ctx1.Behaviors[1]).Invoke(ctx1, static ctx2 =>
        ((MyBehavior3)ctx2.Behaviors[2]).Invoke(ctx2, static ctx3 => Task.CompletedTask)));
```

That’s it! No more closure allocations. With this simple change, we’ve seen 5 times more throughput in the raw pipeline execution benchmarks and all allocations that previously occurred due to closures are gone.
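Putting the pieces together, here is a self-contained C# sketch of the static-lambda pipeline. IBehavior, BehaviorContext, and RootContext loosely follow the snippets in this post, while the Behavior base class, its hypothetical ReceivedNext list, and the use of one context type for every stage are simplifications; this is not the NServiceBus implementation. Running the pipeline twice shows that behavior1 receives the same cached next delegate both times.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Done once at startup: the order of behaviors never changes afterwards.
var behavior1 = new MyBehavior1();
var behavior2 = new MyBehavior2();
var behavior3 = new MyBehavior3();
var cachedPipeline = new IBehavior[] { behavior1, behavior2, behavior3 };

for (int run = 0; run < 2; run++)
{
    // Done per message: every new context gets the shared behaviors array.
    var context = new RootContext { Behaviors = cachedPipeline };

    // Each stage finds the next behavior through the context, so nothing is
    // captured and, with the current Roslyn compiler, each delegate is created
    // once and cached in a static field.
    await behavior1.Invoke(context, static ctx1 =>
        ((MyBehavior2)ctx1.Behaviors[1]).Invoke(ctx1, static ctx2 =>
            ((MyBehavior3)ctx2.Behaviors[2]).Invoke(ctx2, static ctx3 => Task.CompletedTask)));
}

bool sameNext = ReferenceEquals(behavior1.ReceivedNext[0], behavior1.ReceivedNext[1]);
Console.WriteLine(sameNext); // True: the same cached delegate on every run

// Simplified stand-ins, not the NServiceBus types.
interface IBehavior { }

class BehaviorContext
{
    internal IBehavior[] Behaviors { get; set; } = Array.Empty<IBehavior>();
}

class RootContext : BehaviorContext { }

abstract class Behavior : IBehavior
{
    // Hypothetical diagnostics hook: remembers every `next` delegate received.
    public List<Func<RootContext, Task>> ReceivedNext { get; } = new();

    public Task Invoke(RootContext context, Func<RootContext, Task> next)
    {
        ReceivedNext.Add(next);
        return next(context);
    }
}

sealed class MyBehavior1 : Behavior { }
sealed class MyBehavior2 : Behavior { }
sealed class MyBehavior3 : Behavior { }
```

The cast in each stage is cheap compared to the allocations it replaces, and because the array is built once, assigning it to each new context costs only a reference copy.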

Summary

Closure allocations can occur wherever delegates access state outside the body (the curly braces) of the lambda. By removing closure allocations from code that is executed thousands of times per second, it is possible to achieve significant throughput gains. Since the NServiceBus pipeline is such a core part of our framework, it has to be solid and fast, so we continue to make small but impactful performance improvements to NServiceBus. Hopefully this deeper understanding of closure allocations helps you improve your own code as well.

But removing closure allocations to achieve high-performing, low-allocating code is just one trick in your tool belt. In my recent webinar, “Performance tricks I learned from contributing to open source .NET packages,” I summarized not only this tip but also others that I’ve learned from contributing over fifty pull requests to the Azure .NET SDK.

If you are interested in hearing more technical details about other improvements, leave a comment here or reach out to me and I’ll tell you how we managed to get another 11-23% throughput improvement in the NServiceBus pipeline execution, or how we got rid of all allocations from the saga persistence deterministic ID creation. Until then, stay safe and allocation free.
